Cohere: The Full-Stack AI Built for Privacy and Control

Why Cohere is Betting on Enterprise Control over AI

Welcome to the 1st Network Effects Newsletter.

If you're new here, this newsletter is all about unpacking the vision, strategy, and execution behind the world's leading tech companies.

Today, we're diving into Cohere, founded by Aidan Gomez (CEO), Nick Frosst, and Ivan Zhang in 2019.

Let's dive in.

Overview

Cohere builds enterprise-grade AI models (Family of Models) and applications (North and Compass), prioritizing privacy, security, multilingual support, and verifiability.

Cohere distinguishes itself from hyperscaler offerings by prioritizing customizability and enterprise data control. It believes that adopting and then fine-tuning an off-the-shelf consumer AI chatbot is not sufficient for a work environment, so it builds customized technology and tailored models together with its customers to meet their specific needs.

To date, Cohere has partnered with allied government agencies and leading global companies such as Oracle, RBC, and Fujitsu, focusing on deep, customizable, accessible, and cloud-agnostic solutions that deliver immediate practical value to businesses.

Thesis - Growing Demand for Private & Customized AI

One of the main obstacles to AI adoption is concern about data security and privacy, particularly in regulated industries like government, finance, energy, and healthcare. People will only use LLMs in sensitive sectors if they are confident the models are secure.

LLMs are only as useful as the knowledge and data they have access to. Without robust security that enables access to a full view of an enterprise’s data, the utility of AI systems is fundamentally constrained. With its focus on privacy and security, Cohere enables organizations to build AI capabilities that can reach their most sensitive and valuable data assets.

Cohere addresses this critical need by providing a "menu" of AI tools specifically designed for enterprises. This approach allows organizations to build, fine-tune, and deploy large language models directly on their existing infrastructure, ensuring data sovereignty and mitigating the security risks associated with data sharing. By offering this level of control, Cohere empowers enterprises to leverage the transformative potential of AI without compromising their stringent security and compliance protocols. This resonates particularly with organizations seeking to innovate responsibly within complex regulatory landscapes.

Genesis Story

Cohere was founded in 2019 by Aidan Gomez, Ivan Zhang, and Nick Frosst. Gomez, a former researcher at Google Brain, co-authored the landmark 2017 paper “Attention is All You Need,” which introduced the transformer architecture now foundational to state-of-the-art LLMs.

Gomez and Frosst met at Google Brain, while Zhang, a former University of Toronto student and engineer, joined them to build Cohere with the shared belief that foundational AI technology should be accessible and customizable for enterprise use, not locked into closed ecosystems.

“Starting Cohere, Nick, Ivan, and I... the whole objective was to bring this tech, large language models [LLMs], to industry; bring it to the world, and facilitate adoption. Nobody really knew what large language models were, it was a research project, interesting tech, but no real commercial application. But I think we had conviction on the trajectory and the pace of progress and that computers would get increasingly good at manipulating language and we would quite soon arrive at a place where computers could speak our language.”

Product & Services

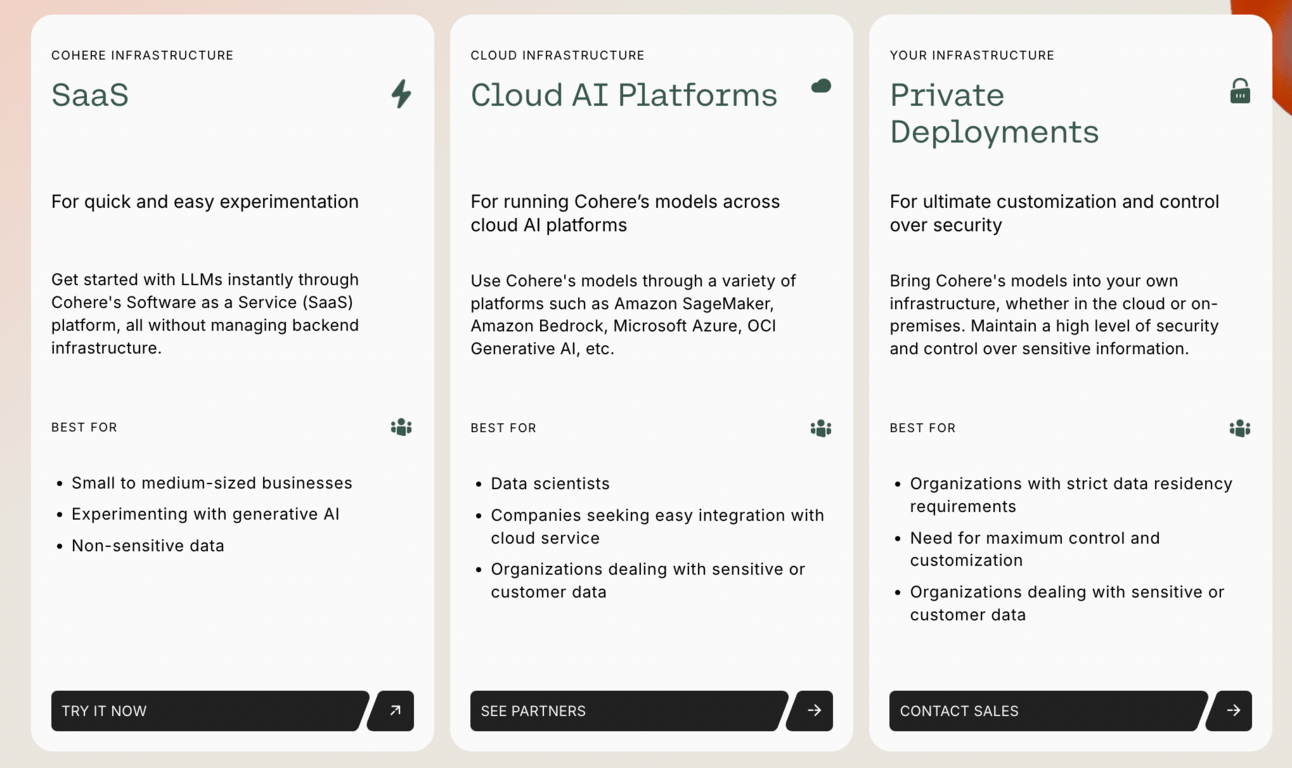

Cohere is building the enterprise layer of the AI stack across three dimensions: generative models, advanced retrieval models, and workplace systems. Together these span everything from infrastructure services to the applications that enterprise users interact with, with an emphasis on latency, privacy, and infrastructure flexibility.

Generative Models

Cohere’s foundation is its Command family of high-performance language models, which are optimized for retrieval-augmented generation (RAG) and include Command A and Command R+. According to Cohere, Command A performs on par with or better than GPT-4o and DeepSeek-V3 across agentic enterprise tasks, with greater efficiency. Command R+ is purpose-built for use cases like summarization, semantic search, and QA across sprawling internal knowledge systems.

Example: A global bank uses Command R+ to generate client-ready research memos from internal analyst notes and market news, while Aya, Cohere’s multilingual model family, ensures the same content can be localized for regulators and stakeholders across Europe and Asia.

Advanced Retrieval Models

Enterprises don’t just need generation—they need grounding. Cohere’s Embed model is a leading multimodal retrieval tool that transforms unstructured data into accessible knowledge. It enables highly accurate semantic search and underpins complex retrieval-augmented generation (RAG) systems. Paired with Rerank, a refinement layer that boosts search result relevance, this retrieval stack ensures AI outputs are rooted in enterprise context. These models are foundational for use cases in legal research, financial document analysis, and knowledge management, where trust and precision are paramount.

Example: A Big Four accounting firm uses Embed + Rerank to build a document search engine across millions of internal audit reports, reducing analyst research time while increasing accuracy and compliance during client engagements.
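To make the two-stage idea concrete, here is a minimal sketch of "retrieve with embeddings, then rerank." Everything in it is a toy stand-in: the bag-of-words `embed` function, the term-coverage `rerank` scorer, and the sample documents are assumptions made for illustration, not Cohere's Embed or Rerank models or their API.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(tokenize(text))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int) -> list[str]:
    """Stage 1: cheap similarity search over every document."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def rerank(query: str, candidates: list[str], top_n: int) -> list[str]:
    """Stage 2 (toy): re-score only the shortlist by query-term coverage."""
    q_terms = set(tokenize(query))
    if not q_terms:
        return candidates[:top_n]
    def score(doc: str) -> float:
        return len(q_terms & set(tokenize(doc))) / len(q_terms)
    return sorted(candidates, key=score, reverse=True)[:top_n]

# Hypothetical mini-corpus; the second entry is deliberately keyword-stuffed.
docs = [
    "audit report on revenue recognition for retail client",
    "revenue revenue revenue audit",
    "holiday party planning notes",
    "audit checklist for revenue recognition controls",
]
query = "audit revenue recognition"

candidates = retrieve(query, docs, k=3)      # broad shortlist
results = rerank(query, candidates, top_n=2)  # refined final ranking
for doc in results:
    print(doc)
```

In this toy corpus the keyword-stuffed document tops the first-stage shortlist, and the second stage pushes it below the two documents that cover every query term. A production reranker would use a learned relevance model rather than term overlap, but the division of labor is the same: a fast first pass over everything, then a more careful pass over a small shortlist.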

Workplace Systems

The workplace system represents the AI interface for end-users. North is an integrated AI platform that acts as a productivity co-pilot, helping employees interact with internal systems, automate tasks, and retrieve information through natural language. Compass extends this by surfacing real-time business insights from fragmented data sources, effectively becoming the enterprise’s intelligent knowledge layer.

Example: A Fortune 500 insurer deploys North as a secure AI assistant for 20,000+ employees to automate internal ticketing, draft compliance communications, and access policies.

Markets

The generative AI market is experiencing one of the fastest growth curves in enterprise tech history. IDC forecasts global AI spending to exceed $632 billion by 2028, growing at a 29% CAGR, with a significant share of that capital earmarked for foundational model infrastructure and enterprise-grade deployments.

We segment this opportunity into two high-leverage vectors:

LLM Infrastructure & APIs: As enterprises shift from experimentation to deployment, demand is accelerating for reliable, performant APIs that can be embedded into proprietary products, internal tooling, and operational workflows. Cohere’s infra-agnostic, enterprise-first model directly addresses this need, serving as a middleware layer between raw AI capability and business constraints (e.g., latency, compliance, data security).

Verticalized AI Solutions: Regulated industries like finance, legal, and healthcare present unique friction points: domain specificity, strict compliance requirements, and sensitive data environments. These constraints increase the demand for customizable, fine-tuned models and secure deployment options (e.g., VPC, on-prem). Cohere’s architecture and multilingual model suite are well-suited to meet this demand.

Importantly, the market is shifting from exploratory pilots to full-scale deployments. A McKinsey 2024 GenAI Pulse survey found that 75% of early adopters are now embedding LLMs into live production systems. As the market stratifies between horizontal model providers and verticalized AI platforms, Cohere is positioned to own the infrastructure layer that enterprise AI is being built on top of, much like Snowflake did for analytics.

“We know the market will need an independent, not tied to any of the hyperscalers platform to enable enterprise to adopt this technology in a way that’s extremely data private and secure.”

Competition

Cohere operates at the intersection of foundational AI infrastructure and enterprise deployment, an arena increasingly shaped by verticalized competitors building systems for specific kinds of knowledge work. The competitive set is no longer just LLM providers such as OpenAI or Anthropic; it also includes startups focused on the interface layer that deliver value directly to the end user. Three notable companies, Harvey AI, Hebbia AI, and Glean, stand out as emerging threats and as signals of how the enterprise AI landscape is fragmenting by use case and control layer.

Harvey AI is one of the leading enterprise AI applications for law firms. Backed by OpenAI and Sequoia, Harvey has rapidly become the default LLM-native copilot for global law firms. Its advantage lies in product depth and specialization: it integrates tightly with legal workflows, redlining, case search, and document generation. Rather than offering an API, Harvey owns the UX layer and delivers a full-stack system tailored to legal teams. It follows a wedge strategy that expands from one function (legal research) into adjacent needs (contract automation, litigation strategy), and later towards other professional services.

Hebbia AI is building a semantic operating system for financial services. Its flagship product blends RAG, search, and spreadsheet-like querying to create a powerful analyst tool for private equity firms, hedge funds, asset managers, and consultancies. It follows a model-agnostic design in which users can toggle between providers for optimal performance and cost-efficiency. Its true edge lies in domain-informed product design: Hebbia builds workflows from domain expertise, translating sophisticated, multi-step processes into single-command outcomes, which makes it indispensable for time-constrained analysts.

Glean represents the most direct horizontal threat to Cohere’s retrieval ambitions. Founded by ex-Google Search engineers, Glean builds internal enterprise search solutions that integrate and unify knowledge across databases, communication channels, and knowledge bases through fine-tuned semantic embeddings. Its traction with large enterprises stems from its seamless integrations with productivity suites like Google Workspace and Microsoft 365, coupled with enterprise-grade security and relevance tuning. Glean’s focus on embedding itself into the core knowledge fabric of an organization makes it a potential platform rather than a feature, and positions it to compete with Cohere’s Compass and retrieval model offerings.

Business Model

Cohere makes money by offering its AI models to enterprises via API with a tiered pricing model.

Source: Cohere

#1 Free Plan

The company's Free Plan targets developers and small projects, providing rate-limited access to all API endpoints and community-based support through Discord, all at no cost.

#2 Production Plan

For businesses requiring more advanced features and scalability, the Production Plan offers elevated ticket support, increased rate limits, and the option to train custom models. Pricing under this plan is pay-as-you-go, with costs per 1 million tokens set at $1.50 for input tokens and $2.00 for output tokens.
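As a rough illustration of how pay-as-you-go pricing adds up, the sketch below applies the two per-million-token rates quoted above to a workload. The function name `estimate_cost` and the sample token volumes are hypothetical, and actual rates vary by model and change over time, so treat this as arithmetic only.

```python
# Rates quoted in this article (USD per 1 million tokens); assumed, not authoritative.
INPUT_RATE_PER_M = 1.50
OUTPUT_RATE_PER_M = 2.00

def estimate_cost(input_tokens: int, output_tokens: int,
                  input_rate: float = INPUT_RATE_PER_M,
                  output_rate: float = OUTPUT_RATE_PER_M) -> float:
    """Estimate the USD cost of a workload billed per million tokens."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens / 1_000_000) * input_rate \
        + (output_tokens / 1_000_000) * output_rate

# Hypothetical monthly workload: 40M input tokens and 10M output tokens.
monthly = estimate_cost(40_000_000, 10_000_000)
print(f"${monthly:.2f}")  # 40 * 1.50 + 10 * 2.00 = 80.00
```

Because input-heavy workloads such as RAG send far more tokens than they generate, the input rate tends to dominate the bill even though the output rate is higher.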

#3 Enterprise Plan

The Enterprise Plan is aimed at large corporations and offers custom pricing based on dedicated model instances, specialized support channels, and a variety of deployment options; a tailored quote requires a consultation with the sales team. In addition to these tiered plans, Cohere has specific endpoint pricing that varies according to the type of service, such as text generation or classification.

This multi-tier approach allows Cohere to capture revenue from a wide spectrum of clients, ranging from individual developers to large enterprises. Existing enterprise customers include RBC, TD, Salesforce, Notion, Jasper, McKinsey & Company, LG Electronics, and more.

Valuations & Fundraising

In June 2024, Cohere raised $450M at a near-$5B valuation from investors including AMD Ventures, Export Development Canada, and Fujitsu, bringing its total funding to over $900M.

Lead investors in previous rounds include Index Ventures (Series A), Tiger Global (Series B), and iNovia Capital (Series C).

Key Opportunities

A. Vertical AI Adoption Acceleration

As enterprises increasingly integrate AI into their core operations, Cohere is well-positioned to benefit from the demand for production-grade, purpose-built AI solutions. Customizing LLMs to fit specific industry needs not only enhances the value these models provide but also increases switching costs for customers. Additionally, the growing network effects from Cohere’s user base create strong, long-term customer relationships, further cementing the platform’s position as an integral part of enterprise operations.

B. International Expansion with GTM Partners

Europe and Asia account for 60% of global GDP, providing a vast runway for market growth. Cohere’s multilingual embeddings and adaptable deployment options make it well-positioned to capitalize on these high-potential markets. With the right strategic alliances, such as its investor relationship with Fujitsu and partnerships with global consultancies like McKinsey and Accenture, Cohere can successfully navigate regulatory complexities and data residency challenges. This would enable the company to become the go-to AI provider for enterprises in these regions, offering robust solutions that prioritize data privacy and control.

C. Sovereign Infrastructure Development

In December 2024, the Government of Canada announced a $240 million CAD investment in Cohere to support the development of a multibillion-dollar AI data center—part of the country’s broader $2 billion Sovereign AI Compute Strategy. This initiative will allow Cohere to train future generations of large language models (LLMs) within Canadian borders, offering a critical advantage for enterprises navigating data residency, compliance, and national security requirements.

As governments and enterprises increasingly seek alternatives to U.S.-centric cloud providers, Cohere’s participation in this sovereign infrastructure project, alongside its strategic partnership with the Canadian government, acts as both a defensive moat and a strategic growth lever, particularly in regulated markets where data sovereignty is non-negotiable.

Key Risks

1. Product Velocity & Depth of Platform Use-Case Design

Command R+ and Embed v3 are powerful primitives, but real value comes when enterprises can plug those into workflows that drive outcomes. That means Cohere needs to maintain a high product velocity not just in model improvements, but in how quickly it can stand up domain-specific tools across verticals. Every enterprise use case — from legal document review to financial risk analysis — demands a unique UX, tuned evaluations, and deployment path. The companies that win in this space aren’t just shipping APIs — they’re co-building with customers to define what "AI-enabled" actually looks like in production.

2. Enterprise AI GTM Competition

The enterprise AI race is hyper-competitive. Cohere must continue to differentiate itself from vertical AI applications such as Hebbia and Harvey AI, and from LLM providers such as OpenAI and Anthropic, which could roll out further enterprise products of their own. Either group could make it harder for Cohere to protect and expand its market share.

3. Scalability of the Current Customization Service Model

Cohere's core differentiation lies in its commitment to deep customization and tailored AI solutions for enterprise clients, particularly in regulated industries. While this approach addresses critical concerns around data privacy and specific use-case requirements, it inherently presents a significant risk to the company's scalability compared to competitors offering more standardized, out-of-the-box solutions.

The highly bespoke nature of Cohere's onboarding and deployment processes necessitates a more resource-intensive approach. Each new enterprise client likely requires a significant investment of time and personnel for solution design, development, deployment and support.

Thanks for reading till the end of the issue. Subscribe to follow the next deep dive on Jobber, the vertical SaaS company for home service SMBs.

Key Resources